We have just reached a new milestone on GOV.UK Verify: our 100th round of usability testing.

100 rounds of usability testing is certainly a lot. Here are some numbers to put it into context:

- 600 users

- 600 hours in the lab

- 500 hours of analysis

- 200 hours of presenting results and prioritising issues

- 30,000 sticky notes

And that’s not all. Those 100 rounds are in addition to a range of other research we do: large-scale remote usability testing; contextual research in users’ homes and job centres; customer support queries and feedback; analytics; A/B testing; and accessibility testing.

Why so much research?

Traditionally, some companies have conducted only one round of usability testing on a product or service, to fix issues before launch. While some research is better than no research, I would argue that there is limited value in testing a product once, “fixing” the issues, and launching it. How do you know that your solutions have addressed the issues you observed, unless you test them again? And what if you discover a major problem for users, but it's too late for you to fix it?

At GDS, we believe that continuous, iterative research is the key to building services that meet user needs.

Our Service Design Manual states that user research should be done in every iteration of every phase, starting in discovery and continuing through live.

Through an iterative design and testing process, we can constantly assess our service to understand if it meets user needs, test new design ideas, identify new issues, and check if any previous issues have been solved.

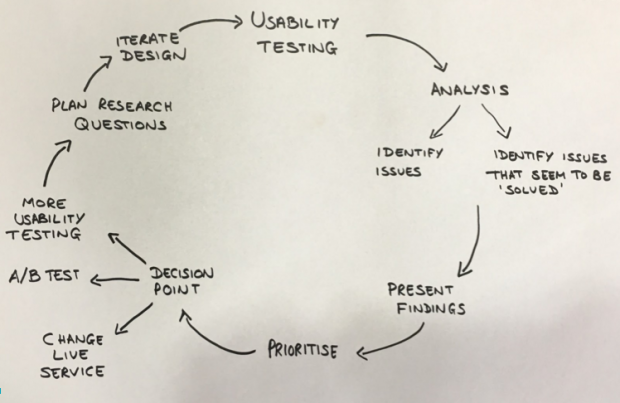

This diagram shows the iterative user research process that we follow in our work. It also shows that, once issues are solved, we can either A/B test the solutions to quantify whether they work at scale, or push them into the live service.

This quantity of research does come with some challenges...

Doing 100 rounds of usability testing means that we have a really detailed understanding of our user needs, and a lot of insight into what works and what doesn’t work in terms of designing the GOV.UK Verify journey.

However, it also comes with a number of challenges:

- Clear documentation and effective indexing are essential, so that we can see which design solutions have been explored in the past and what was learned, understand why particular design decisions were made, and go back to explore issues that may not have been in focus at the time

- Staff changes over the 100 rounds mean that knowledge can be lost (making the documentation particularly critical)

- Keeping the wider team engaged when our progress is sometimes only incremental. To do this, it’s essential to share the longer-term goals we are working towards, so the team understands the context

- Having the courage to revisit design ideas that may not have worked in the past, to see whether attitudes have changed (for example, attitudes to social media).

What has changed as a result of research?

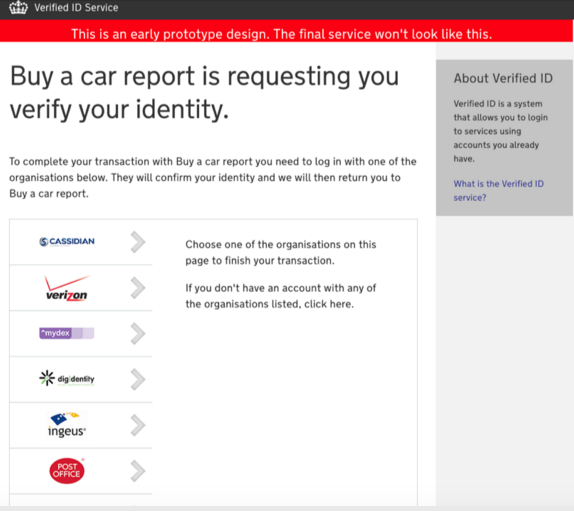

Here’s what GOV.UK Verify looked like in December 2012, before usability testing started:

And here’s what it looks like now:

100 rounds is just the beginning…

GOV.UK Verify is live, but we have not finished doing research. We will continue to iterate our design and test it continually, and keep working to make the service as simple and straightforward as possible for users.

For regular updates on GOV.UK Verify’s development, subscribe to the blog.

6 comments

Comment by Fiona posted on

Hello, I'm interested in hearing more about how you document/index the research that you are doing to keep track of progress and developments over time. Is that something that could be shared?

Comment by Emily Ch'ng posted on

Hi Fiona,

Thanks a lot for your comment. I'm not completely sure whether you'd like to hear more about the documentation methods we use to keep track of such large volumes of research findings, or about the findings themselves, so just in case, I’ve got an answer for both!

Our method of documentation relies on having a set of clearly articulated research questions at the start of every iteration. After each day in the lab, we write a report (in the form of a presentation) on everything we learned about each research question that week. We present this to the team and collectively decide what we're going to do next about each finding. We then store that presentation alongside the other documentation for that iteration, which builds up a chronological file system. We also use a Trello board that organises findings by research question, so we can browse documents by topic rather than by date.

The system is still evolving and improving, and we plan to blog about it in detail in the future.

As for the findings themselves: as mentioned above, they always relate very specifically to that week's research questions. We use blog posts to summarise findings over a period of research. For example, in the past we have blogged about our research into accessibility, how our research feeds into government design standards, and what we have learned about user needs through research.

You can find these blog posts here:

Accessibility: https://identityassurance.blog.gov.uk/2016/06/02/accessibility-research-for-gov-uk-verify/

Design standards: https://identityassurance.blog.gov.uk/2016/03/31/gov-uk-verify-and-the-government-design-standards/

User needs: https://identityassurance.blog.gov.uk/2015/07/24/gov-uk-verify-how-we-talk-about-user-needs/

Comment by Maria posted on

Hi,

Thanks a lot for the article, it's really interesting.

Could you explain whether the team works with open, perhaps vaguer questions that can grow and evolve, or with more closed, defined questions related to, for example, metrics?

Do you use any system to determine which method will best answer which questions? I usually write questions based on hypotheses and assumptions, and then later figure out which methodology is best. I'm thinking about starting a system to determine that, but don't have anything yet.

Thanks a lot for sharing your knowledge.

I'm really interested because I currently work with research questions, and I would like to see how others are doing it.

Comment by Lorna Wall posted on

Hi Maria,

I’m really glad you enjoyed it.

In terms of the questions, we mostly start with more open ones which grow and evolve over time. We often have the same research question for a number of test rounds, and that gives us the chance to iterate the question too.

Once we have a solution we are fairly confident in from the usability lab, we aim to A/B test it with a clear hypothesis that we can test with metrics.

There are times when we have more closed research questions, such as when we were assessing the quality of certified companies’ user journeys before connecting them to Verify. In those cases, we assessed the journeys against expected outcomes. You can read about this here: https://userresearch.blog.gov.uk/2016/06/08/choosing-the-best-methods-to-answer-user-research-questions/

The above blog post hopefully answers your second question too, as it explains the system we use to work out which research method to use to answer each question.

Thanks,

Lorna

Comment by Joe posted on

Hi - when starting to decide the research questions that you want to explore in your next iteration, is that done in sprint planning?

Comment by Lorna Wall posted on

Hi Joe,

Our research runs on a 2-week cycle, which goes as follows:

- Monday, week 1: prepare for the lab

- Tuesday, week 1: testing

- Wednesday, week 1: analysis

- Thursday, week 1: report results back to the Verify programme

- Friday, week 1 to Friday, week 2: iterate the design

After the Thursday results session, we define our research priorities with key stakeholders from the Verify programme and plan the research questions for the next iteration.

I hope that answers your question, but please ask if you need more information.

Lorna